Table of Contents

- Betterzon - Software Requirements Specification

- Table of Contents

- 1. Introduction

- 2. Overall Description

- 2.2 Product perspective

- 3. Specific Requirements

- 3.1 Functionality

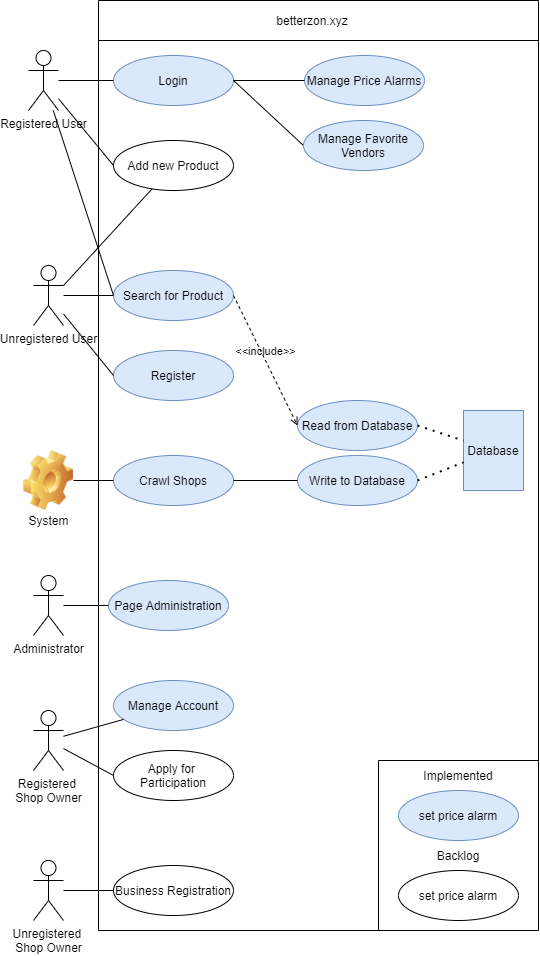

- 3.1.4 Use Cases

- 3.2 Functionality - User Interface

- 3.3 Usability

- 3.4 Reliability

- 3.5 Performance

- 3.6 Supportability

- 3.7 Design Constraints

- 3.8 Online User Documentation and Help System Requirements

- 3.9 Purchased Components

- 3.10 Licensing Requirements

- 3.11 Legal, Copyright and other Notices

- 3.12 Applicable Standards

- 4. Supporting Information

This file contains Unicode characters that might be confused with other characters. If you think that this is intentional, you can safely ignore this warning. Use the Escape button to reveal them.

Betterzon - Software Requirements Specification

Table of Contents

1. Introduction

1.1 Purpose

This Software Requirment Specification (SRS) describes all specifications for the application "Betterzon". It includes an overview about this project and its vision, detailled information about the planned features and boundary conditions of the development process.

1.2 Scope

The project is going to be realized as a Web Application. Planned functions include:

- Searching for products, that are also listed on Amazon

- Finding a better price for the searched item

- Finding a nearby store, that has the item in stock

- Registering for the service

1.3 Definitions, Acronyms and Abbreviations

| Term | |

|---|---|

| SRS | Software Requirements Specification |

1.4 References

| Title | Date |

|---|---|

| Blog | 19/10/2020 |

| GitHub | 17/10/2020 |

| Jenkins | 19/10/2018 |

| AngularJS | 19/10/2020 |

| Use Case Diagram | 19/10/2020 |

1.5 Overview

2. Overall Description

2.1 Vision

We plan to develop a website that provides you with alternative places to buy other than Amazon. In the beginning, it’s just going to be online stores that may even have better prices than Amazon for some stuff, but we also plan to extend this to your local stores in the future. That way, you can quickly see the prices compared to each other and where the next local store is that offers the same product for the same price or maybe even less.

2.2 Product perspective

The website consists of four main components:

- Frontend

- Backend

- Database

- Webcrawler

2.3 User characteristics

We aim for the same kind of users that amazon has today. So we need to make sure, that we have a very good usability.

3. Specific Requirements

3.1 Functionality

3.1.1 Functionality – Backend

The backend should read data from the database and serve it to the frontend.

3.1.2 Functionality – Database

The database stores the findings from the webcrawler. Also a history is stored.

3.1.3 Functionality – Webcrawler

The webcrawler crawls a predefined set of websites to extract for a predefined list of products.

3.1.4 Use Cases

The use cases are linked in the wiki.

3.2 Functionality - User Interface

3.2.1 Functionality - Frontend

The User interface should be intuitive and appealing. It should be lightweight and not overloaded with features so anyone is easily able to use our service.

3.3 Usability

We aim for excellent usability. Every person that is able to use websites like amazon should be able to use our website.

3.4 Reliability

We can of course not guarantee that the prices we crawl are correct or up-to-date, so we can give no warranty regarding this.

3.4.1 Availability

99.2016%

3.5 Performance

The website should be as performant as most modern websites are, so there should not be any waiting times >1s. The web crawler will run every night so performance won't be an issue here. The website will be usable while the crawler is running as it is just adding history points to the database.

3.5.1 Response time

Response time should be very low, on par with other modern websites. Max. 50ms

3.5.2 Throughput

The user traffic should not exceed 100 MBit/s. As we load pictures from a different server, the throughput is split, improving the performance for all users.

3.5.3 Capacity

The size of the database should not exceed 100GB in the first iteration.

3.5.4 Resource utilization

We plan to run the service on 2 vServers, one of which runs the webserver and database and both of them running the web crawler. The crawler will also be implemented in a way that it can easily be run on more servers if needed.

3.6 Supportability

The service will be online as long as the domain belongs to us. We cannot guarantee that it will be online for a long time after the final presentation as this would imply costs for both the domain and the servers.

3.7 Design Constraints

Standard Angular- and ExpressJS patterns will be followed.

3.7.1 Development tools

IntelliJ Ultimate GitHub Jenkins

3.7.2 Supported Platforms

All platforms that can run a recent browser.

3.8 Online User Documentation and Help System Requirements

The user documentation will be a part of this project documentation and will therefore also be hosted in this wiki.

3.9 Purchased Components

As we only use open-source tools like mariaDB and Jenkins, the only purchased components will be the domain and the servers.

3.10 Licensing Requirements

The project is licensed under the MIT License.

3.11 Legal, Copyright and other Notices

As stated in the license, everyone is free to use the project, given that they include our copyright notice in their product.

3.12 Applicable Standards

We will follow standard code conventions for TypeScript and Python. A more detailed description of naming conventions etc. will be published as a seperate article in this wiki.

4. Supporting Information

N/A